Estimating is one of the most challenging practices in project management. Whether you’re trying to figure out project effort, duration or cost, the inherent uncertainty and uniqueness of projects mean that we often end up “guesstimating.” This article presents techniques to help you make your estimates as accurate as possible. While I focus on effort estimation, the same techniques apply to duration and cost estimation.

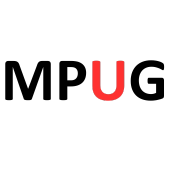

Let’s start with a reminder about how project time management is articulated, according to the PMI® PMBOK® Guide & Standards, Fifth Edition:

Analogous Estimating

This is, I think, the most common form of estimation. You base your estimate on your experience from previous projects, otherwise known as historical data, and on lessons learned. This method is fairly accurate when the work is similar (same project type, same resources, etc.). The calculation can be adjusted using parameters such as duration, budget, resources and complexity. This is also the method to use when you have limited information about the project, for example when there is no detailed task list yet. The disadvantage of this approach is that the organization needs similar past projects for comparison.

Parametric Estimating

This form of estimation uses a formula, also based on historical data. Here’s an example: you know from past experience as a handyman that it takes you 10 hours to tile 20 square meters, that is, 0.5 hours per square meter. Today you need to estimate how long it will take to tile 40 square meters, so your estimate is 20 hours. The more sophisticated your model, the more accurate your estimates will be. For example, you might add one extra hour of tiling or cleaning for every 40 square meters, or account for the higher risk of defective tiles over a larger area. The disadvantage is the same as with analogous estimating: no historical data, no parametric estimation.
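As a minimal sketch of the tiling example in Python, the function below derives a unit rate from the historical data and scales it to the new area. The function name and the `extra_hours_per_40_m2` refinement parameter are hypothetical, chosen only to show how a formula can grow more detailed.

```python
# Historical data from the example: 10 hours to tile 20 square meters.
HISTORICAL_HOURS = 10.0
HISTORICAL_AREA_M2 = 20.0


def parametric_estimate(area_m2: float, extra_hours_per_40_m2: float = 0.0) -> float:
    """Estimate tiling hours from a unit rate derived from historical data.

    extra_hours_per_40_m2 is a hypothetical refinement: extra tiling or
    cleaning time added for each full 40 m2 block of the area.
    """
    rate = HISTORICAL_HOURS / HISTORICAL_AREA_M2  # 0.5 hours per m2
    base = rate * area_m2
    extra = (area_m2 // 40) * extra_hours_per_40_m2
    return base + extra


print(parametric_estimate(40))       # simple linear model: 20.0
print(parametric_estimate(40, 1.0))  # with one extra hour per 40 m2: 21.0
```

Each refinement adds another term to the formula, which is why a richer model needs more historical data to calibrate.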

Three-point Estimating

The idea is to improve on single-point estimating by defining three estimates for each task, which takes risk and uncertainty into account. The first is the best-case estimate, called the Optimistic value (OP). The second is the worst-case scenario, called Pessimistic (PE). The last falls between the other two and is called Most Likely (ML). Each of these may be defined using one of the previous techniques (analogous or parametric). You can then define the effort as a simple average:

(OP+PE+ML)/3

A variation of this technique is the Program Evaluation and Review Technique (PERT), which uses a weighted average that gives the Most Likely estimate four times the weight of each of the other two:

Expected Time = (OP+4ML+PE)/6
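Both formulas are easy to compute. The sketch below uses hypothetical estimates of 8 (OP), 10 (ML) and 18 (PE) hours to show how PERT pulls the result toward the Most Likely value compared with the simple average; the function names are illustrative only.

```python
def three_point_average(op: float, ml: float, pe: float) -> float:
    """Simple three-point average: (OP + ML + PE) / 3."""
    return (op + ml + pe) / 3


def pert_estimate(op: float, ml: float, pe: float) -> float:
    """PERT weighted average: (OP + 4ML + PE) / 6."""
    return (op + 4 * ml + pe) / 6


# Hypothetical task: optimistic 8 h, most likely 10 h, pessimistic 18 h.
print(three_point_average(8, 10, 18))  # 12.0
print(pert_estimate(8, 10, 18))        # 11.0
```

With a long pessimistic tail, the simple average is pulled further from the Most Likely value than the PERT estimate is.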

The disadvantage of this technique is that it’s time-consuming, because you have to define three estimates for each task.

Expert Judgment Estimating

In this approach, you ask a knowledgeable expert to estimate the effort for you, based on the historical information they have. Its accuracy depends on the expert and his or her background. There are two main pain points: 1) finding such an expert when you need one; and 2) accepting what the expert tells you even when there’s no apparent rationale other than “This is what I, as an expert, think it should be.”

Published Data Estimating

Some organizations regularly publish effort data from past projects, which anyone who is a member or employee can use as a benchmark for their planned activities. While this approach can be highly accurate, it also depends on many parameters (domain, company size, culture, etc.), which makes it difficult to find information that suits your situation.

Bottom-up Estimating

This is the Work Breakdown Structure (WBS) approach. You decompose your work into small packages that are easier to understand and therefore easier to estimate accurately. You then aggregate those estimates at the project level to understand the whole effort. If a work package or decomposed activity can’t be estimated, you have to break it down again. The drawback is that the method is time-consuming.
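The roll-up step can be sketched in Python as a recursive sum over a nested WBS. The work packages and hours below are hypothetical, and a package left as `None` stands for one that can’t be estimated yet and needs further decomposition.

```python
from typing import Union

# Hypothetical representation: a WBS node is a leaf estimate in hours,
# None if it cannot be estimated yet, or a dict of named child nodes.
WbsNode = Union[float, None, dict]


def roll_up(node: WbsNode) -> float:
    """Aggregate leaf estimates up the Work Breakdown Structure."""
    if isinstance(node, dict):
        return sum(roll_up(child) for child in node.values())
    if node is None:
        # Mirrors the rule above: if it can't be estimated, break it down again.
        raise ValueError("Work package cannot be estimated yet; decompose it further")
    return float(node)


# Hypothetical WBS for a small website project (hours).
wbs = {
    "Design": {"Wireframes": 16, "Visual design": 24},
    "Build": {
        "Front end": {"Pages": 40, "Forms": 12},
        "Back end": 32,
    },
    "Test": 20,
}

print(roll_up(wbs))  # 144.0
```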

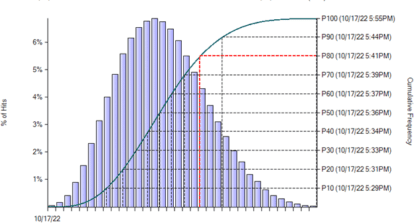

Project Management Software Simulation

Software simulation is used to model uncertainty. The best-known example is Monte Carlo simulation. Instead of feeding single numbers into a formula (which then yields a single number), the Monte Carlo method takes a distribution of values (such as a normal distribution) for each input, samples from it many times, and produces a distribution of results as output.

Given how complex it is to implement and apply across many project tasks, this method can be time-consuming. (In practice, I’ve personally never applied it to any of my projects.)
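To make the idea concrete, here is a minimal Python sketch of a Monte Carlo simulation of total effort. It assumes each task is described by hypothetical three-point estimates and, rather than the normal distribution mentioned above, samples each task from a triangular distribution (`random.triangular`), a common choice when only OP, ML and PE are known. Running many trials yields a distribution of totals from which you can read percentiles such as P50 or P80.

```python
import random
import statistics

# Hypothetical tasks with (optimistic, most likely, pessimistic) hours.
TASKS = {
    "Design": (8, 10, 18),
    "Build": (30, 40, 70),
    "Test": (10, 12, 20),
}


def simulate_totals(tasks, runs=10_000, seed=42):
    """Return one simulated total effort per run."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    totals = []
    for _ in range(runs):
        # random.triangular takes (low, high, mode), i.e. (OP, PE, ML).
        total = sum(rng.triangular(op, pe, ml) for op, ml, pe in tasks.values())
        totals.append(total)
    return totals


totals = sorted(simulate_totals(TASKS))
p50 = totals[len(totals) // 2]
p80 = totals[int(len(totals) * 0.8)]
print(f"mean={statistics.mean(totals):.1f} h, P50={p50:.1f} h, P80={p80:.1f} h")
```

The P80 value, for instance, is an effort level that roughly 80% of the simulated outcomes did not exceed, which is more informative than a single point estimate.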

Group Decision-making

This is less a technique in itself than a collection of techniques. The idea is to work with a group of people to assess effort, duration or cost. The advantages are the sharing of experience and knowledge, and the involvement of project team members, which increases their commitment to the result.

Delphi Method

The Delphi method is a group decision-making technique that relies on interactions within a panel of experts. Participants give their estimates to a facilitator, who provides an anonymous summary of the experts’ judgments together with their reasoning; the experts can then revise their estimates in further rounds. Anonymity helps protect participants from cognitive biases such as the halo effect or the bandwagon effect.

Planning Poker

Also called Scrum Poker, this gamified technique derives from the Delphi method: a group of people tries to reach consensus on effort (it was originally used in agile teams for story point estimation). Each participant has a deck of numbered cards, with each number representing story points or days. Everyone places the card matching their estimate face down, and all cards are revealed simultaneously. The highest and lowest estimates are removed. This is repeated until consensus is reached (of course, anyone can revise their estimate in each round based on the discussion). An egg timer can help time-box the discussions.
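The round-by-round logic described above can be sketched in Python. Consensus is assumed here to mean that all remaining cards show the same value once the highest and lowest are removed; the participants and card values (from a Fibonacci-like deck) are hypothetical.

```python
def poker_round(estimates: dict[str, int]) -> tuple[list[int], bool]:
    """Reveal all cards, drop the highest and lowest, and check for consensus.

    Assumption: consensus means every remaining card shows the same value.
    """
    cards = sorted(estimates.values())
    remaining = cards[1:-1] if len(cards) > 2 else cards
    consensus = len(set(remaining)) == 1
    return remaining, consensus


# Hypothetical rounds: participants revise their cards after discussion.
rounds = [
    {"Ana": 3, "Ben": 8, "Chen": 5, "Dia": 13, "Eli": 5},
    {"Ana": 5, "Ben": 5, "Chen": 5, "Dia": 8, "Eli": 5},
]

for number, cards in enumerate(rounds, start=1):
    remaining, agreed = poker_round(cards)
    print(number, remaining, agreed)
    if agreed:
        break
```

With these inputs, the first round leaves [5, 5, 8] (no consensus) and the second leaves [5, 5, 5] (consensus), so the loop stops after round 2.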

Other Techniques Worth Considering

A quick browse of Wikipedia reveals many other techniques to consider, none of which I’ve tried, but all of which sound useful in particular situations. Here are two that I found particularly interesting:

The constructive cost model (COCOMO) is an algorithmic software cost estimation model that uses a regression formula with parameters derived from historical project data and current and future project characteristics.
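As an illustration of the kind of formula COCOMO uses, the sketch below implements Basic COCOMO, the simplest form of the original 1981 model, where effort in person-months is a × KLOC^b and the coefficients depend on the project mode. Later variants (Intermediate COCOMO and COCOMO II) add cost drivers that this sketch leaves out, and the 32 KLOC input is a hypothetical example.

```python
# Basic COCOMO (1981) effort coefficients (a, b) per project mode.
BASIC_COCOMO = {
    "organic": (2.4, 1.05),
    "semi-detached": (3.0, 1.12),
    "embedded": (3.6, 1.20),
}


def basic_cocomo_effort(kloc: float, mode: str = "organic") -> float:
    """Effort in person-months = a * KLOC ** b (Basic COCOMO)."""
    a, b = BASIC_COCOMO[mode]
    return a * kloc ** b


# Hypothetical project of 32,000 lines of code.
for mode in BASIC_COCOMO:
    print(mode, round(basic_cocomo_effort(32, mode), 1))
```

Because b is greater than 1, effort grows faster than size, which reflects the model’s assumption of diseconomies of scale.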

The Putnam model is an empirical software effort estimation model in which software project data is collected and fitted to a curve. The estimate is created by examining project size and calculating the associated effort using the model’s software equation.
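For reference, the Putnam software equation is commonly written as below, where Size is the estimated size in lines of code, Productivity is the organization’s process productivity parameter, Time is the schedule in years, B is a scaling factor that depends on project size, and Effort is in person-years. Treat this as a summary of the published form rather than a ready-to-use model; the parameters must be calibrated against your own project data.

```latex
\text{Effort} = \left[ \frac{\text{Size}}{\text{Productivity} \cdot \text{Time}^{4/3}} \right]^{3} \cdot B
```

Because Time appears raised to the fourth power once the bracket is cubed, the equation implies that compressing the schedule increases effort sharply.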

If you ask me what I use, I’ll reply, “It depends.” I always start with a basic estimate, based on either analogy or expert judgment. Then, depending on the risks or complexity inherent to the project, I apply parametric estimating or go through the work of three-point estimating. I’ve found that breaking tasks down into smaller, more understandable activities is also a very good approach. Finally, group decision-making techniques help me fine-tune the estimates.

In other words, the appropriate estimation technique for your project depends on your experience, your preferences and many other project and situational parameters. I recommend building your own technique from whatever you find useful in these methods.

Project Management Professional, PMP, PMI and PMBOK are all registered trademarks (®) of the Project Management Institute.

E K

What about Function Point estimations? Do you think they are still relevant in this day and age?

Michael

Nice overview of estimating techniques.

What is missing, and I think is pertinent to any discussion of estimating, is an understanding of probability and how it applies to estimating, particularly when using single-point estimates. You refer to PERT (as is necessary) as a 3-point estimate. I think it would also be worth raising n-point estimating more generally. One may not want to use 3-point estimating (PERT) for various reasons; however, in my opinion, 2-point estimates are useful and preferable to single-point estimates.

Also, I think function point estimates for software development are worth including.

Eric Uyttewaal

Jeremy, Great overview of estimating techniques from the different industries (construction, software) and scheduling methodologies (CPM, Agile, ToC)! Thanks!

Jeremy Cottino

@Eswara

All of these techniques can be applied to “Function Point estimations.” As for whether it is still relevant, I read the Wikipedia article about “Function Point estimations,” which contains a “Criticism” section you can check. I am personally not enough of an expert in this system to judge its effectiveness, but I know people who use it quite often for IT systems.

@Michael

Multi-point estimating ranges from 2-point estimates, as you mentioned, through 3-point estimates with PERT, which I think is the most common and best known, to Monte Carlo analysis, where distributions are used as input (a multitude of estimates). As with any of these techniques, the best one for you depends on your process maturity. I agree that 2-point estimation might be a good compromise, as I have never seen Monte Carlo analysis used effectively on a fairly standard project.

@Eric

Thanks for your comment 🙂

@Dennis

Regarding your first point, you are totally right: estimating duration is the last step, and the first (most important) ones are 1) listing all tasks (trying not to forget any) and 2) linking them. Forgetting tasks will cause a bigger error than under- or overestimating a single task.

On your second point: this is why it is important to track the project rather than only create an initial plan, print it and pin it on your wall. Estimates must be updated during the project.

On your last point: it is important to take into account potential errors in your estimates (which can also be seen as risk management). The “bad way” is to add padding to your tasks (you think it is 10, but you put 12). The good way to manage uncertainty is to add contingency reserves at the project or even the task level. In the early stages, we talk about a ROM (Rough Order of Magnitude) estimate, with a range of -25% to +75%, and we move to definitive estimates narrowed to -5% to +10%.
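Applying those ranges is simple arithmetic. As a hypothetical example, the sketch below turns a 100-hour point estimate into the low and high bounds implied by a ROM estimate (-25% to +75%) and a definitive estimate (-5% to +10%); the names are illustrative only.

```python
# Accuracy ranges quoted above, as percentage bounds (low, high).
ACCURACY_RANGES = {
    "ROM": (-25, 75),
    "definitive": (-5, 10),
}


def estimate_range(point_estimate: float, kind: str) -> tuple[float, float]:
    """Return the (low, high) bounds around a point estimate."""
    low_pct, high_pct = ACCURACY_RANGES[kind]
    low = point_estimate * (100 + low_pct) / 100
    high = point_estimate * (100 + high_pct) / 100
    return low, high


for kind in ACCURACY_RANGES:
    print(kind, estimate_range(100, kind))
```

A 100-hour ROM estimate therefore spans 75 to 175 hours, while a definitive estimate spans 95 to 110 hours.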

Many thanks, Dennis, for your comments.

@Hussain

The Delphi method is a group decision-making technique (meaning that a group of people try to reach a consensus on, for example, a task duration) in which a facilitator collects estimates from experts and shares them ANONYMOUSLY. This is useful when the group includes a senior person or someone with “power” whose choice, if known, might influence the estimates of others; people might think, “If the expert says x, he is probably right, so I’ll say the same.” This is known as the “halo effect.”

Planning poker is also a group decision-making technique, but instead of saying or writing their estimates, experts play cards on which the estimates are written. This adds a gamification dimension. But of course, the key is not the cards (which are ultimately incidental) but the group decision.

Jeremy Cottino

Many thanks, Ruari, for sharing your experience.

Praveen Malik

Hi Jeremy,

Great, comprehensive article. PMs should use each of these techniques as appropriate. I have also written a few articles on estimation describing parametric, analogous and three-point estimating, but yours is a complete overview.

BR

Praveen Malik.

Jeremy Cottino

Thanks, Praveen.