As a long-time fan of all things aviation, I’m enamored with all 16 seasons (to date) of the TV series Mayday: Air Crash Investigation, where air disasters are dramatized and the causes for each crash are painstakingly determined.

As a project manager, I’m also interested in (but not enamored with) projects that have failed on my watch — or on the watch of the clients that I work with. In both cases, commercial airline disasters and project disasters capture my imagination on a daily basis.

Figuring out what went wrong — with both airplanes and projects — seems critical for prevention. Yet failed projects don’t get nearly the attention that failed aircraft do, even though the impact of both types of crashes can affect the lives of hundreds and waste millions of dollars in one bad blow.

Take, for example, United Flight 173, which was en route to Portland in 1978 when it simply ran out of fuel, killing 10 of the 189 on board and trashing a $6 million DC-8 jetliner. Immediately after the disaster, it was unclear what caused the crash. The crew had reported a landing gear problem, and then the pilot began circling the airport, trying to figure out how to fix the gear. But when all four engines flamed out, there was no way to return to the runway, forcing the jet to crash-land in a nearby residential area.

Eerily, that failure reminds me of a project I once worked on while developing software for IBM. In that case the project was mysteriously pulled from the program after the product was developed and ready to be sold. Without any explanation from management, we watched the project drop like a plane without fuel, with no idea why it had failed or how it could have been made to succeed. I suspect the reason for this project’s failure was that it simply ran out of gas (read: money) before the product could land. But without some investigation (and we were given no time for that), none of us on board ever really knew what brought that project down.

Unlike many failed projects, all failed commercial airliners are exhaustively investigated for cause. Pilots of commercial airlines are never allowed to simply move on after a crash. So why do we as project managers often do just that? OK, so more often than not we do write up a few “lessons learned” that, in all likelihood, read more like sugar-coated justifications than true investigations.

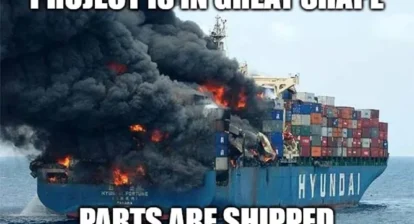

I get it. No one wants to dwell on failure when projects don’t reach goals or miss key targets. Yet I’ve seen seemingly sane project managers do what is classically called insanity: repeating, during ongoing projects, the same mistakes made in past projects. We often believe that if we just try to do this or that better, or harder, then the outcome will improve and our next project will just soar. Imagine an airline operating that way (no, don’t; the images would be horrific).

In that light, this three-part series of articles explores how commercial aviation, which has improved so dramatically over time, can be used as a model for improving our next project. We’ll also explore how to use similar investigative techniques to ensure project safety and reliability — before our projects fail!

The Problem: More Projects Fail Than Fly

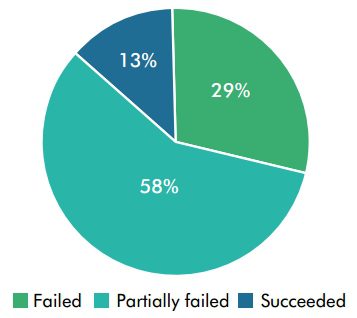

Statistics show that more often than not, projects fail, regardless of type or sector. For example, The World Bank recently reported that large-scale information and communication technology (ICT) projects (each worth over $6 million) fail or partially fail at a rate of 71 percent, with only 29 percent completed successfully. Compare that with recent airline statistics, where a failure is defined as a flight with at least one death: The failure rate is just 0.00003 percent, a success rate of near perfection.
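To put those two percentages side by side, here is a minimal sketch of the arithmetic in Python. It uses only the two figures quoted above; the function names are made up for illustration, and a per-project rate is not strictly comparable to a per-flight rate, so treat the result as a rough sense of scale rather than a rigorous statistic.

```python
# Figures quoted in the article; both are percentages.
PROJECT_FAILURE_PCT = 71.0     # large ICT projects that fail or partially fail
FLIGHT_FAILURE_PCT = 0.00003   # flights with at least one death


def one_in_n(failure_pct: float) -> float:
    """Convert a failure percentage into 'one failure per N attempts'."""
    return 100.0 / failure_pct


def relative_risk(a_pct: float, b_pct: float) -> float:
    """How many times larger failure rate a is than failure rate b."""
    return a_pct / b_pct


print(f"Projects: one failure in about {one_in_n(PROJECT_FAILURE_PCT):.1f}")
print(f"Flights:  one failure in about {one_in_n(FLIGHT_FAILURE_PCT):,.0f}")
print(f"Ratio:    about {relative_risk(PROJECT_FAILURE_PCT, FLIGHT_FAILURE_PCT):,.0f} to 1")
```

By these numbers, a large ICT project is roughly 2.4 million times more likely to "crash" than a commercial flight is to end in a fatality.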

When I asked one of the authors of this alarming World Bank report why these projects failed at such a high rate, I was referred to the report’s lengthy and dense policy recommendations, but I couldn’t find any specific or otherwise useful cause there, such as human error or poor project design. One can only conclude that specific and actionable reasons “why” are beyond comprehension, and out of the realm of mere mortal readers. I suspect this is consistent with what you see in your line of work as well.

To Prevent Future Failures, We Need To Know Why

As project managers, we need to know why our projects fail (or partially fail) in order to prevent a repeat of the same mistakes on our next project, which would ultimately help more people (in the case of development projects) and save money (in the case of all projects). Two tools commonly deployed come to mind:

- Lessons learned from past projects; and

- Risk management and monitoring and evaluation plans for current projects.

Yet take this definition of what a “lesson learned” is:

“A lesson learned is knowledge or understanding gained by experience that has a significant impact for an organization. The experience may be either positive or negative. Successes are also sources of lessons learned… Lessons learned systems tend to be more organization-specific than alert systems.”

As a long-time project manager and an armchair air-crash investigator, I find that this definition of a lesson learned chills me to the bone.

Human nature dictates that in our professional environments we will focus more on our successes than on our failures and that as human beings we focus more on positive outcomes than negative ones. After all, this is almost the definition of human evolution. (Of course, this doesn’t apply to today’s social media, where we tend to behave in opposition to positive outlooks and success stories and prefer to be critical of just about anything posted; just see Twitter.)

Taking the lessons learned from any failed project, you will most likely read more about the positives of the failure than the concrete reasons for the failure — in other words, you get a sugar coating of whatever happened and nothing more substantial, such as concrete data that shows precise project failure points.

But the definition of lessons learned above does use two words that make my heart stop: alert systems. In this context, the author of this definition is referring to a risk management and mitigation system, and perhaps even the monitoring and evaluation systems that any large-scale project would employ.

These tools are used to evaluate project risks, mitigate disaster and constantly monitor project progress. Unfortunately, these tools are often drawn up in isolation from any lessons learned, or don’t systematically take into account all (or even most) data from past or similar endeavors, because the data on past failures are never fully or easily accessible. And after all, we are mostly focused on the future: all eyes ahead…

What would be better is to have a set of true alert systems, as found on any modern airliner and within the commercial aviation sector as a whole, which employs a wide array of useful tools that include:

- Onboard flight systems that constantly monitor every aspect of the mechanical operations of the plane. Imagine if you had this built into every ongoing project, alerting you to any potential problem before it becomes a catastrophe!

- Traffic collision avoidance and runway safety systems that monitor an aircraft in commercial airspace. Imagine if you had this to manage your portfolio of projects currently in the air or preparing for takeoff!

- Enhanced ground proximity warning systems that monitor an aircraft in relation to the ground. Imagine if your organization had a system that was monitoring all projects in relation to your organization’s topology (resources, goals, mandate, etc.)!

- Crew resource management systems that, unlike what you might expect, don’t manage who is available for work, but do manage the interaction between crew members and improve the human factors of flying. Imagine if you had this installed in your project management office, helping teams work better, smarter, together!

These are just a few examples of the many checks and balances deployed by the aviation industry. I provide them here as food for thought for those in the project development and implementation industry.

In part 2 of this short series we’ll explore more aspects of these aviation safety systems and look at precisely what the aviation industry does after a crash, and at how you can do likewise the next time one of your projects runs into a glitch or, worse, crashes and burns.

In part 3 we’ll explore setting up your own “project safety board,” which will act just like the many national transportation safety boards around the globe, all doing great investigative work.

Image credits:

World Development Report 2016: Digital Dividends

Tombstone: Mike Licht

Tom Smith

With reference to the project with IBM that was pulled from the program: from what little information is given, I assume that it was cancelled by management somewhere, at some level. I’m not sure that from a PM perspective it is a failed project. If a project is running hot, straight, and normal and is cancelled, it could be for any number of reasons. Perhaps market conditions changed. Maybe something with a higher priority took precedence. Change in government regulation perhaps?

As for the value of good lessons learned studies, I agree completely. Looking at a project postmortem is a bit like working up a risk assessment at the project’s start. Too many managers assume that bad things won’t happen to their project because … well, I never get a really good explanation of this. It’s just that it won’t happen to me/us/our project.

ED YEE

Management do not know how to manage; organizations are not trained or set up on a PM methodology to manage projects on an ongoing basis. Stakeholders are not holding responsible individuals accountable for the success of a project (and providing the necessary resources for success: review, pain, accountability, risk management). The project postmortem is not taken seriously and results are not rewarded.

Maybe corporate governance for project-structured enterprises needs to be a primary focus of the organization. With matrix-managed projects, nobody is responsible; the project team and hierarchical managers report to different lines of management and goals. With DOD projects, the business director is typically the program manager… everybody reports to the program manager, who owns the P&L. The organization is project focused; “support” organizations are there to serve the project objectives. Postmortem audits might be better served by an audit function that reports back to top management, as is the case with the FAA. The project team is off to a new project on completion.

Jigs Gaton

@ Tom Smith: very good points. On cancelled projects, assuming it was realized during the later delivery stage that the project was untenable for financial reasons or did not fit into the organization’s portfolio, I’d call that a failure! Better financial management with insight into the organization’s portfolio would have prevented the project from exiting the inception phase, hence saving a ton of money and people hours.

On “that just won’t happen to me/us”: so true! We are built that way, so overly optimistic to the point of peril. But the pilots that I know don’t board a plane thinking that way. Just the opposite.

Jigs Gaton

@ ED YEE: yes, all symptoms of an impending crash. And a mandatory audit with a failure report back to top management does seem prudent, eh? Stay tuned for Part 2 and Part 3 of “Mayday! Project Crash Investigation,” which will address some of these issues.

Nitin Sharma

Great comparison. A good project governance model in the organization should enforce the postmortem of each project, with meaningful lessons learned supported by facts and data points. The guidelines from the results of lessons learned should be communicated to all project managers or be made part of training modules.

Looking forward to the next two parts of the series…